Creating Jobs (Portal, API)

Learn how to create Jobs for data collection.

Pipeline Required: If you do not yet have a Pipeline, create one first. The Pipeline ID and Step ID are required to add Jobs to the correct Pipeline and Step.

If you want to manage Jobs via the API, you first need to set up the Pipeline in the Portal UI. Once the Pipeline is set up, you can create new Jobs through the API.

Our platform provides two convenient ways for you to create jobs: through our intuitive web Portal and via our API. Whether you prefer a graphical interface or a programmatic approach, we've got you covered.

With our platform, you can easily manage the job creation process from start to finish. Below, we'll walk you through both options in detail.

Creating a Job within Portal

In the Portal, navigate to your Pipeline. If the Pipeline is not running, deploy it first. You can deploy it from the pipeline's ellipsis menu in the pipeline list view, or from the pipeline's 'View' mode.

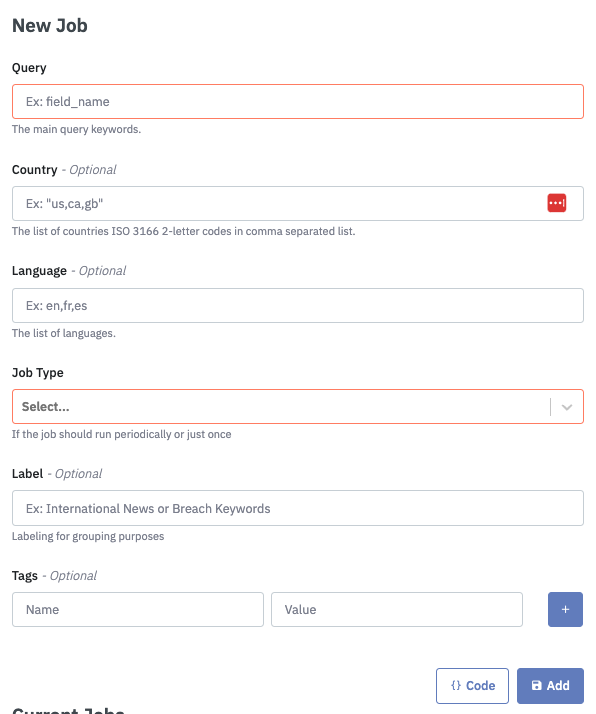

Next, select 'Manage Jobs' on the Ingress Component you want to create Jobs for. This opens a configuration sidebar, and below the setup section you can create a new Job. The sidebar also shows the options available for the data source, which vary from one data source to another.

Example for Socialgist Blogs Ingress Component.

Created Jobs are listed below under "Current Jobs". For a more detailed view, use the "Pipeline Jobs Page" link.

Creating a Job Via API

API calls that create Jobs interact with this Pipeline Component, so you need both the correct Pipeline ID and the correct Step ID.

Follow the Portal steps above to open the Ingress Component's "Manage Jobs" view, where you can retrieve the Pipeline ID and Step ID that form part of the request URL.

Quick Tip: Creating an initial Job in the UI is not required, but the UI shows the API request "Code" you can use to build your own API requests.

Once you have configured a Job in the UI, select the "{} Code" button to view the completed API request. You can use this code snippet as a template for creating additional Jobs through the API endpoint. Selecting "Add" instead adds the Job manually for immediate processing.

Understanding Job Creation API Request

Here is a sample Job Creation API Request.

```shell
curl --location 'https://api.platform.datastreamer.io/api/pipelines/3f8be588/components/d5wykwcclfi/jobs?ready=true' \
  --header 'apikey: <your-api-key>' \
  --header 'Content-Type: application/json' \
  --data '{
    "job_name": "bf5912e9-5e09-45c8-9a61-3ea75fbc7248",
    "data_source": "socialgist_blogs",
    "query": {
      "query": "cats AND dogs",
      "country": "FR",
      "language": "fr"
    },
    "job_type": "periodic",
    "schedule": "0 0 0/6 1/1 * ? *",
    "label": "Test Label"
  }'
```

URL (Required)

```
https://api.platform.datastreamer.io/api/pipelines/3f8be588/components/d5wykwcclfi/jobs?ready=true
```

The URL is the same for every new Job in that Step (Ingress Component). It contains the Pipeline ID (3f8be588 in this example) followed by the Step ID of the Ingress Component (d5wykwcclfi).
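If you script Job creation, you can assemble this URL from the Pipeline ID and Step ID retrieved from the "Manage Jobs" view. The following is a minimal POSIX shell sketch; the function name jobs_url is hypothetical, and the base URL and ?ready=true parameter are simply copied from the sample request.

```shell
#!/bin/sh
# Hypothetical helper: build the Job creation URL for one Ingress Component.
# The base URL and "?ready=true" are copied from the sample request;
# only the Pipeline ID and Step ID change between components.
API_BASE='https://api.platform.datastreamer.io/api'

jobs_url() {
  # $1 = Pipeline ID, $2 = Step ID of the Ingress Component
  printf '%s/pipelines/%s/components/%s/jobs?ready=true\n' "$API_BASE" "$1" "$2"
}

# Usage: rebuilds the URL used in the sample request.
jobs_url 3f8be588 d5wykwcclfi
```

Keeping the two IDs as arguments lets the same helper serve every Ingress Component in every Pipeline you manage.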

Job Name (Required)

```json
"job_name": "bf5912e9-5e09-45c8-9a61-3ea75fbc7248",
```

The Job Name identifies this Job in analytics and job management screens. It has no specific format requirements, so you can use it to organize and track your Jobs. Jobs created in the UI are given a randomly generated Job Name.
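Because the Job Name has no format requirements, a descriptive name such as blogs-cats-fr works as well as a random one. If you prefer names shaped like the ones the UI generates, the sketch below builds a UUID-style string of random hex digits. The function name random_job_name is hypothetical, and the output is not a standards-compliant UUID (no version or variant bits are set); it only follows the 8-4-4-4-12 layout.

```shell
#!/bin/sh
# Hypothetical helper: print a random, UUID-style Job Name
# (8-4-4-4-12 lowercase hex digits). Not an RFC 4122 UUID.
random_job_name() (
  # Read 16 random bytes and print them as 32 hex characters on one line.
  hex=$(od -An -tx1 -N16 /dev/urandom | tr -d ' \n')
  # Split the hex string into the five UUID-style groups.
  printf '%s-%s-%s-%s-%s\n' \
    "$(printf '%s\n' "$hex" | cut -c1-8)" \
    "$(printf '%s\n' "$hex" | cut -c9-12)" \
    "$(printf '%s\n' "$hex" | cut -c13-16)" \
    "$(printf '%s\n' "$hex" | cut -c17-20)" \
    "$(printf '%s\n' "$hex" | cut -c21-32)"
)

random_job_name
```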

Data Source (Required)

```json
"data_source": "socialgist_blogs",
```

The data source is required. Some components may allow multiple sources, but most operate with a single data source.

Query (Required)

```json
"query": {
  "query": "cats AND dogs",
  "country": "FR",
  "language": "fr"
}
```

The Query is required and contains the data collection criteria. Additional filters are optional and vary by source; common filters include language, country, and content type. All available filters for a source are shown in the Ingress Component's 'New Job' UI.
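When building Job bodies in a script, optional filters can be added only when you have a value for them. This sketch covers only the country and language filters from the sample request; the function name build_query is hypothetical, other filter names vary by source and should be taken from the 'New Job' UI, and no JSON escaping is performed, so values containing double quotes or backslashes are not supported.

```shell
#!/bin/sh
# Hypothetical helper: build the "query" object, adding the optional
# "country" and "language" filters only when they are non-empty.
# Assumes values contain no double quotes or backslashes (no JSON escaping).
build_query() (
  # $1 = query string (required), $2 = country (optional), $3 = language (optional)
  json=$(printf '{"query": "%s"' "$1")
  if [ -n "${2:-}" ]; then
    json=$(printf '%s, "country": "%s"' "$json" "$2")
  fi
  if [ -n "${3:-}" ]; then
    json=$(printf '%s, "language": "%s"' "$json" "$3")
  fi
  printf '%s}\n' "$json"
)

build_query 'cats AND dogs' FR fr   # with both filters
build_query 'cats AND dogs'         # query only
build_query dogs '' fr              # language filter only
```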

Job Type (Required)

```json
"job_type": "periodic",
"schedule": "0 0 0/6 1/1 * ? *",
```

The Job type specifies whether the Job is "onetime" or "periodic". If you do not specify a type, the Job defaults to "onetime" and runs a single time.
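The sketch below prints the job-type fields for either kind of Job as a JSON fragment that can be spliced into a Job body. The function name job_type_fields is hypothetical, and placing query_from and query_to at the top level of the Job body, alongside job_type, is an assumption; check the "{} Code" output in the Portal for your data source before relying on it.

```shell
#!/bin/sh
# Hypothetical helper: print the job-type fields of a Job body as a JSON
# fragment (no surrounding braces) to splice into a larger body.
#   job_type_fields periodic '<cron expression>'
#   job_type_fields onetime ['<query_from>' '<query_to>']
# Assumption: query_from/query_to sit at the top level of the Job body.
job_type_fields() (
  case "$1" in
    periodic)
      printf '"job_type": "periodic", "schedule": "%s"\n' "$2"
      ;;
    onetime)
      if [ -n "${2:-}" ] && [ -n "${3:-}" ]; then
        printf '"job_type": "onetime", "query_from": "%s", "query_to": "%s"\n' "$2" "$3"
      else
        # No time frame given: emit only the job type.
        printf '"job_type": "onetime"\n'
      fi
      ;;
    *)
      echo "unknown job type: $1" >&2
      exit 1  # exits only the function's subshell
      ;;
  esac
)

job_type_fields periodic '0 0 0/6 1/1 * ? *'
job_type_fields onetime '2024-05-01T00:00:00Z' '2024-06-01T00:00:00Z'
```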

Periodic: The Job runs on the specified schedule (a cron expression) and collects new data from the period since its previous run. If the specified interval is shorter than the source supports, the shortest interval the source supports is used instead.
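The sample schedule "0 0 0/6 1/1 * ? *" has seven fields and uses "?", which matches the Quartz-style cron layout: seconds, minutes, hours, day of month, month, day of week, year. Read that way, it runs at 00:00, 06:00, 12:00 and 18:00 every day. Assuming the platform follows this layout, the hypothetical helper below labels each field of an expression so you can check a schedule before submitting it; it only splits and labels fields and does not validate their values.

```shell
#!/bin/sh
# Hypothetical helper: label each field of a 7-field, Quartz-style cron
# expression. It checks the field count only, not the field values.
describe_cron() (
  # Disable globbing so "*" and "?" are not expanded against file names.
  set -f
  # Split the expression on whitespace into positional parameters.
  set -- $1
  if [ "$#" -ne 7 ]; then
    echo "expected 7 fields, got $#" >&2
    exit 1
  fi
  for label in seconds minutes hours day-of-month month day-of-week year; do
    printf '%-13s %s\n' "$label" "$1"
    shift
  done
)

describe_cron '0 0 0/6 1/1 * ? *'
```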

One Time: The Job runs once and, where the data source supports it, collects data from a specified time frame. The time frame is set by "From" and "To" dates in this format: "2024-06-01T00:00:00Z".

```json
"query_from": "2024-05-01T00:00:00Z",
"query_to": "2024-06-01T00:00:00Z"
```

Labels

```json
"label": "Test Label"
```

A Label is a single string applied to the data as it is received from a Job, allowing additional sorting and analytics downstream.

Tags

You can also apply Tags, as an array of name/value pairs, to the data as it is received from a Job.

```json
"tags": [
  {
    "name": "default-folder",
    "value": "test"
  }
]
```

Additionally, when you use the Azure Blob Storage Egress, Google Cloud Storage Egress, or Amazon S3 Storage Egress components, tags can control how files are created:

- Any tag can be used to specify the folder that files are created in: the Egress components can be configured to use a tag's "value" as the folder name. For more information, see Pipeline Egress Overview.

- The tag "collation_id" can be used instead of the work ID when collating documents into a single file. This allows documents generated by different Jobs to be collated together.
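If you generate Jobs from a script, the tags array can be built from simple name=value arguments. In this sketch the function name build_tags and the collation_id value campaign-2024 are hypothetical, and no JSON escaping is done, so names and values must not contain double quotes or backslashes. Whether an Egress component uses a given tag as the folder name still depends on how that component is configured.

```shell
#!/bin/sh
# Hypothetical helper: turn name=value arguments into the "tags" array.
# Each argument is split at its first "=", so values may contain "=".
# Assumes no double quotes or backslashes (no JSON escaping is done).
build_tags() (
  out='['
  sep=''
  for pair in "$@"; do
    name=${pair%%=*}   # text before the first "="
    value=${pair#*=}   # text after the first "="
    out="$out$sep{\"name\": \"$name\", \"value\": \"$value\"}"
    sep=', '
  done
  printf '%s]\n' "$out"
)

# Usage: a folder tag for the Egress component plus a collation_id tag.
build_tags default-folder=test collation_id=campaign-2024
```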

Max Documents

Max Documents is available for Jobs and acts as a soft cap on the number of documents ingested. The platform counts the documents ingested and stops further collection for that Job once the maximum has been reached. Because each data request to a provider can return several documents, the final count may be slightly higher than the maximum. The value must be an integer.
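To see why the final count can land slightly above the cap, consider a provider that returns documents in fixed-size batches, with the cap checked only between requests. The simulation below illustrates that assumption; it is not the platform's implementation, and the batch size of 300 is hypothetical.

```shell
#!/bin/sh
# Illustration only: simulate a soft cap that is checked between batches.
# Real providers return varying numbers of documents per request.
simulate_soft_cap() (
  max=$1      # max_documents
  batch=$2    # documents returned per provider request (hypothetical)
  if [ "$batch" -le 0 ]; then
    echo "batch size must be positive" >&2
    exit 1
  fi
  count=0
  # Keep requesting while the cap has not been reached; because the check
  # happens between requests, the last batch can push the count past it.
  while [ "$count" -lt "$max" ]; do
    count=$((count + batch))
  done
  echo "$count"
)

simulate_soft_cap 10000 300   # prints 10200
```

With a cap of 10000 and batches of 300, the 34th request takes the total from 9900 to 10200, so collection stops 200 documents over the cap.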

```json
"max_documents": 10000
```

Creating Jobs in Bulk via API

The Jobs API supports creating many Jobs in a single API call.

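- See Understanding Job Creation API Request to obtain the pipeline jobs URL. The bulk endpoint adds /bulk after /jobs, as shown in the example below.

- Prepare a collection of Jobs:

  - As when creating a single Job via the API, define each Job, then combine the Jobs into a JSON array.

- Create and start the Jobs by sending the collection in a single request.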
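The first two steps can be sketched in shell as follows. The function names are hypothetical; bulk_url assumes the bulk endpoint is the single-Job URL with /bulk inserted after /jobs (as in the example URL below), and bulk_body assumes each argument is already a complete JSON Job object. The final curl call is left as a comment because it needs a real API key; API_KEY, JOB_1 and JOB_2 are hypothetical variable names.

```shell
#!/bin/sh
# Step 1 (hypothetical helper): derive the bulk URL by inserting "/bulk"
# after "/jobs" in the single-Job URL, keeping any query string.
bulk_url() (
  printf '%s\n' "$1" | sed 's#/jobs?#/jobs/bulk?#; s#/jobs$#/jobs/bulk#'
)

# Step 2 (hypothetical helper): combine individual Job bodies, one JSON
# object per argument, into a single JSON array.
bulk_body() (
  out='['
  sep=''
  for job in "$@"; do
    out="$out$sep$job"
    sep=', '
  done
  printf '%s]\n' "$out"
)

JOBS_URL='https://api.platform.datastreamer.io/api/pipelines/3f8be588/components/d5wykwcclfi/jobs?ready=true'
bulk_url "$JOBS_URL"
bulk_body '{"job_name": "job-1", "data_source": "socialgist_blogs", "query": {"query": "dogs"}}' \
          '{"job_name": "job-2", "data_source": "socialgist_blogs", "query": {"query": "cats"}}'

# Step 3 (not run here): send the array in one request, e.g.
#   curl --location "$(bulk_url "$JOBS_URL")" \
#     --header "apikey: $API_KEY" \
#     --header 'Content-Type: application/json' \
#     --data "$(bulk_body "$JOB_1" "$JOB_2")"
```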

Example - Creating Jobs in Bulk

```shell
curl --location 'https://api.platform.datastreamer.io/api/pipelines/3f8be588/components/d5wykwcclfi/jobs/bulk?ready=true' \
  --header 'apikey: <your-api-key>' \
  --header 'Content-Type: application/json' \
  --data '[
    {
      "job_name": "bf5912e9-5e09-45c8-9a61-3ea75fbc7248",
      "data_source": "socialgist_blogs",
      "query": {
        "query": "dogs",
        "country": "FR",
        "language": "fr"
      },
      "job_type": "periodic",
      "schedule": "0 0 0/6 1/1 * ? *",
      "label": "Job 1 Label"
    },
    {
      "job_name": "8709610B-93AC-48BD-990B-BA3249F561D0",
      "data_source": "socialgist_blogs",
      "query": {
        "query": "cats",
        "country": "FR",
        "language": "fr"
      },
      "job_type": "periodic",
      "schedule": "0 0 0/6 1/1 * ? *",
      "label": "Job 2 Label"
    }
  ]'
```